The following are the key takeaways from the AI for Good Summit that took place in May 2019. In his keynote address, Siemens’ Snabe said that “ever since it began, technology was always based on promises.” He continued that data and AI are now recognized as fundamental tools for solving the grand challenges facing humanity, so we need safe and secure collaboration and sharing. AI is not a new invention, but the possibilities have now become endless: Shanghai alone has committed a budget of 18 billion US dollars to AI. But what are we using this technology for today, and how? There is little information available to make decisions (e.g., for medical doctors).

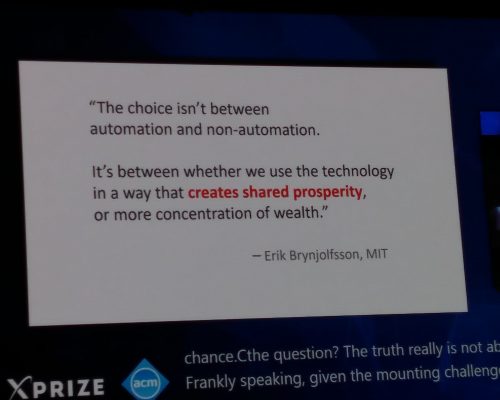

Clearly, we have a pacing problem: AI is accelerating at a pace that is outstripping our ability to deal with its disadvantages. Policy makers need to catch up. We need nimble, decentralized work, with stakeholders working closely together to disseminate information.

Human-centric

One thing we know for sure: the human needs to be at the center of the internet. AI needs good data and good identities. We also have to be aware that the system is watching you as much as you are watching it. One example is identity for refugees: they have different identities depending on where they are in their journey to asylum, and identity systems can easily turn into tools for government surveillance.

A future of blended human and AI learning: likewise, we have to be aware that we are moving towards ecosystems of lifelong learning (students, parents, human teachers, etc.).

Considerations:

- AI is all around us. It achieves superhuman performance in various tasks and games and is used to address societal challenges.

- How do we do AI at scale, the way the internet, the web and the chip operate at scale and keep growing? The challenge is data silos: organizations are very reluctant to share their data. What must we overcome to reach scalability? The ability to build a community, to connect problem owners with solvers, and lastly a scaling funnel.

- Language preservation through AI: 80% of languages will disappear, and language faces big challenges.

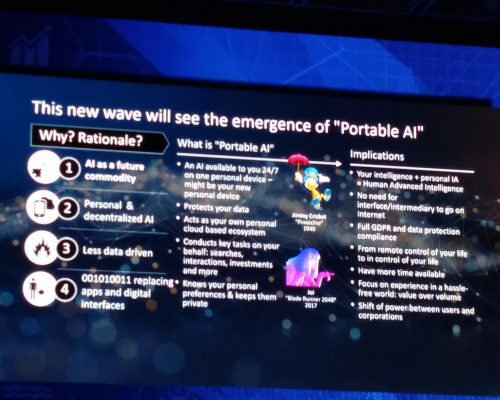

In society

The new wave will see the emergence of portable AI: AI as a future commodity, personal and decentralized AI that is less data-driven, with AI replacing apps and digital interfaces. We will enter a new era of HAI (human augmented intelligence), with intellectually augmented employees, consumers and citizens.

In Industry

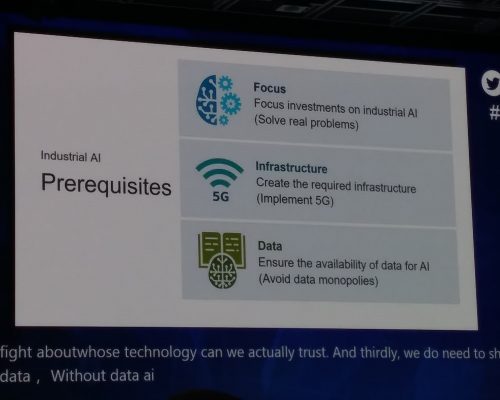

There are three preconditions for the use of industrial AI:

- focus [focus investments on industrial AI]

- infrastructure [create the required infrastructure]

- data [ensure availability of data for AI]

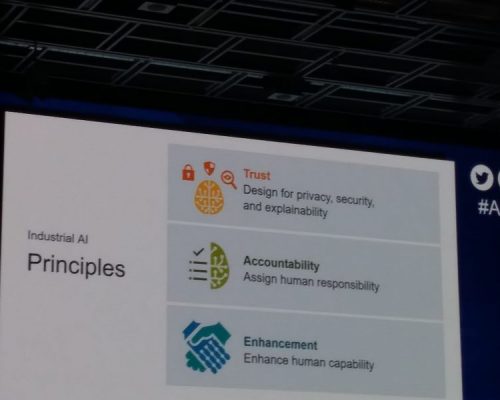

We have to work through three principles:

- Trust: a level of trust, with design for privacy, security and explainability.

- Accountability: assign human responsibility. If your car has a problem, we know who to talk to.

- Enhancement: enhance and unleash human capacity, and empower people.

In Government

- Governments now rely on AI for decisions that affect people, so there must be strong inclusion. We will not inspire trust if we fail to address the lack of diversity in data and the lack of diversity in the coding itself. Governments also bear responsibility for the unintended consequences of AI.

- Applications of AI in digital identity systems [to expand access to social services, etc.] could be key to development and to addressing the lack of legal identity for many people. But they carry risks, which calls for a user-centric model.

- There is a responsibility to protect vulnerable populations through non-discrimination principles.

- AI in the digital information realm: homing in on the challenges of enhancing the quality and diversity of digital information through the lens of UNESCO’s framework.

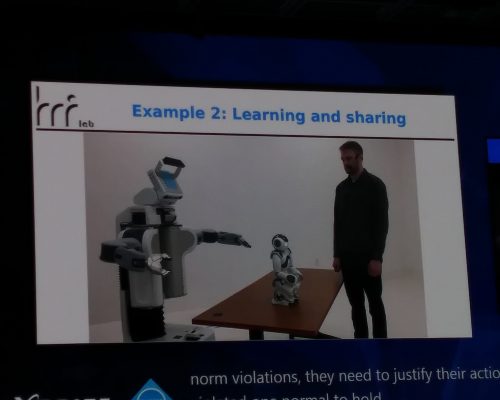

- How AI can enforce human rights in practice. Discussions covered governance and digital cooperation, how to combat deepfakes, and how to build human norms into robots, with a range of civil society organizations taking part.

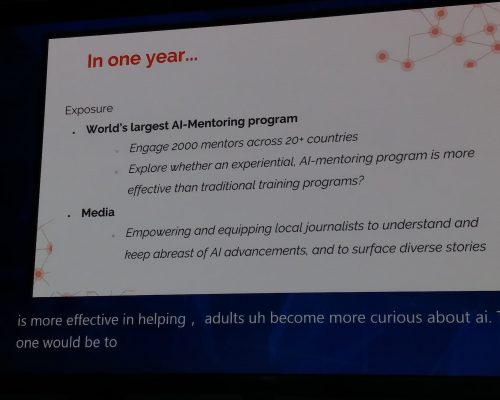

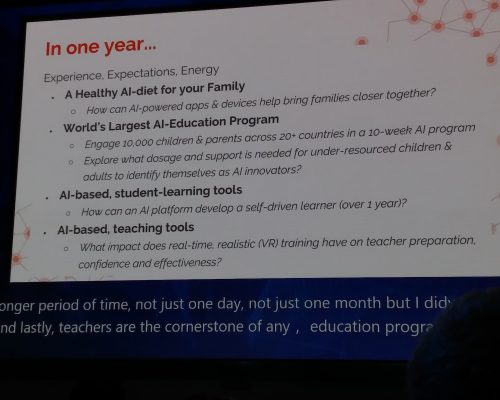

In Education

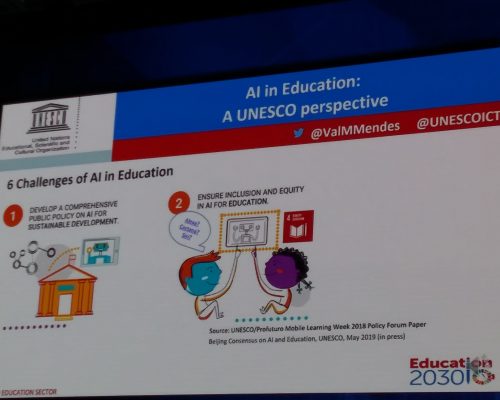

- There are 6 challenges of AI in education:

1- Develop a comprehensive public policy on AI for sustainable development.

2- Ensure inclusion and equity in AI for education.

3- Prepare teachers for an AI-powered education.

4- Develop quality, inclusive data systems. We don’t have reliable data, and our systems don’t connect to each other.

5- Make research on AI in education significant.

6- Ensure ethical AI and data transparency. We don’t have an ethical approach to data.

How can we use AI to accelerate learning? How can we train students for a future in which jobs will largely involve AI? Any new technology that comes along affects lives, including students’ lives.

In ethics

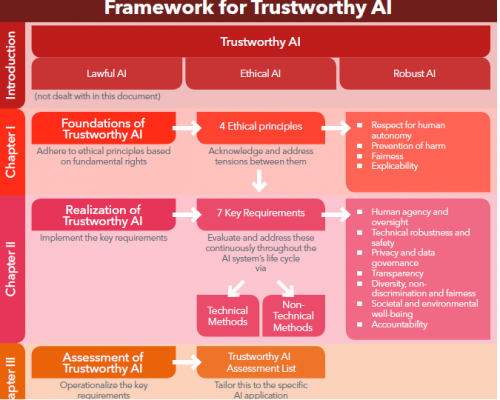

There are seven ethical considerations when building automated decision-making systems (ADMs) for vulnerable people, among them:

- Inclusion of those who are most at risk: social integration and employability are important.

- Fairness and integrity: in refugee status determination, you need to appeal to the fairness of the system and its integrity, bringing those cases to the attention of case officers.

- Diversity and openness: emergency preparedness.

- Transparency and dignity: registration and identity management. Dignified solutions.

In Technology

- Facial recognition and its impact on children:

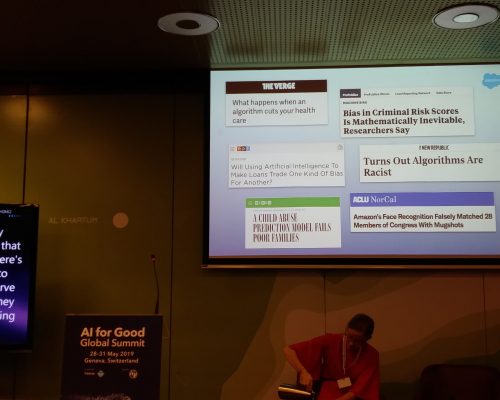

1- bias and discrimination

2- understanding and adaptability: the likelihood that only wealthier societies and children have access [digital divide];

3- data misuse [how do we know that data will not affect you for the rest of your life? For example, behavior during school could affect employment];

4- and finally, the right to privacy.

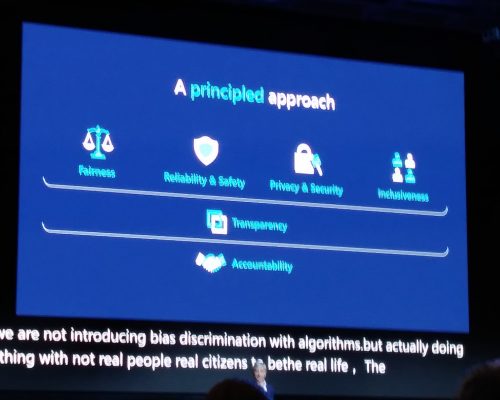

- How can technology both empower and divide? What is our responsibility? Principles:

1- Privacy and human rights in using data.

2- Cybersecurity needs many partnerships, such as the new Digital Geneva Convention for cybercrime and security.

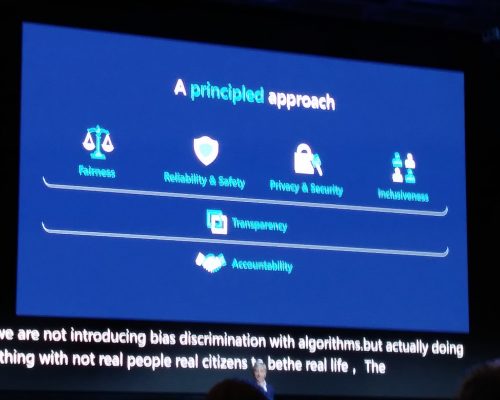

3- The need for a principled approach to AI: fairness, reliability and safety, privacy and security in design, inclusiveness, transparency and accountability.

Three areas where the UN system can make an active difference:

- Formulating AI values and standards, and helping to guide development so that it stays focused.

- We have a pacing problem: AI is accelerating at a pace that is outstripping our ability to deal with its disadvantages. Policy makers need to catch up.

- We need nimble, decentralized work, working closely across stakeholders for the dissemination of information.

Key recommendations:

- Ensure automated decision-making systems (ADMs) are inclusive, diverse, fair and transparent.

- Close the gap: put people’s best interests and needs first, because they are the people affected by these systems. Too often, feedback is given but nothing happens.

- There needs to be an auditing mechanism, and we have to be able to provide records.

- The question is not accountability but “correctability”: the ability to correct the AI. You can’t just log a problem; some action needs to be triggered. Take Cortana, for example: if you swear at it, it will first tell you that this is not appropriate, then it will tell you something else, and then it will stop responding.

- The question of regulation and auditing is huge. How do we regulate AI systems, especially in authoritarian contexts?

- AI content filtering is problematic. It is notoriously bad with LGBT content, for example.

- Build ethical cultures and mindsets within companies creating AI systems. We need both top-down and bottom-up solutions. We can train students and employees to work ethically with AI, but without top-down incentives it won’t work. Policies are not enough. Profit at any cost is not the way to do business.

- Who are the people who have influence over AI systems?

- We embed rules in AI systems, but they will mirror the behavior they are exposed to, so we have to remove bias from our business processes. We feed AI data: if Latinx and African American borrowers pay more for mortgage loans, that pattern will go into the systems and they will replicate it.

- Create explicit feedback mechanisms and evaluate often. Consider news headlines such as “When algorithms cut your health care,” “Bias in criminal risk scores is mathematically inevitable, researchers say” and “A child abuse prediction model fails poor families.” How can we change this? We are talking about human rights, access to housing, etc. When governments use AI to make decisions, we have to demand that they use the right AI.

- It takes time and effort to get it right. This is not set and forget. We have to make sure that it is doing what it should be doing and fix it.

Implications for child rights:

Bias and discrimination; understanding and adaptability, including the likelihood that only wealthier societies and children have access [digital divide]; data misuse [how do we know that data will not affect you for the rest of your life? For example, behavior during school could affect employment]; and finally, the right to privacy.

- Automated surveillance systems: in the past, they could detect, classify and recognize; now, they can identify.

- Surveillance and facial recognition applications in a child’s world: facial recognition is being introduced under the label of safety and security.

- We must have inclusive design, safety and privacy by design, awareness campaigns, a regulatory framework, and integration into national AI strategies. Most of the world does not have a plan or strategy in place, and where national strategies exist, inclusion takes a back seat.

- There is a need to develop “existing and new digital identity solutions that are inclusive, trustworthy, safe and sustainable.”

- There is a need to go back to the design table and think about solutions [e.g., Tim Berners-Lee’s Solid].

- Huge data growth increases network demands. Building AI competence means working with universities to develop the skills: we need data scientists, not just technical knowledge.

- We cannot have solutions without data, and civil society cannot use data unless governments provide it. Data ecosystems are therefore very important.

- AI is about using innovative algorithms, so we need good incubation in universities. The applications are tremendous: if we draw on our young population and provide it with good education and early financing (the role of government), then we will succeed.

- People must have incentives. The mission needs to be responsive to the needs of society. People need to train for a global market. This requires initiatives, foresight and investment.

- We need to make sure we are not regulating non-personal data. We have issues of accuracy, consistency and governance. We need to monitor AI: algorithms can monitor other algorithms, but humans must be able to intervene, because the data itself could be biased in the first place. This is where privacy, security and bias come into play.

Three Principles

There are three principles that we need:

1- Trust: we need a level of trust, designing for privacy, security and explainability.

2- Accountability: assign human responsibility. If your car has a problem, we know who to talk to. We cannot have platforms that say “I’m just a platform.”

3- Enhancement: enhance and unleash human capacity, and empower people.

There are three preconditions for the use of industrial AI:

- focus [focus investments on industrial AI]

- infrastructure [create the required infrastructure]

- data [ensure availability of data for AI]

Key quotations:

- Using robotics to build kids, not using kids to build robotics. [Chris Rake]

- Raising a child is the same as creating an AI system. We do not want delinquent AI systems. [Kathy Baxter, Architect, Ethical AI Practice, Salesforce]

- The people being impacted by the systems: we need to learn to put their interests first. [Rebeca Moreno Jimenez, Innovation Officer & Data Scientist, UNHCR Innovation Service]

- The system is watching you as much as you are watching it. [Steven Vosloo, Policy Specialist, Digital Connectivity & Policy Lab, UNICEF]

- We must not focus on the technology but on the problem we want to solve, and this builds trust in the technology. [H.E. James Kwasi Thompson, Minister of State for Grand Bahama, Office of the Prime Minister]

- The code of governance is more important than the code of ethics or the ‘principles’: how are companies and governments making those decisions? What is their process? [JoAnn Stonier, Mastercard]

- There is spatial apartheid. Predictive policing is a high-stakes scenario; emotion recognition is a high-stakes scenario. We don’t need to solve problems for people, but we can break down barriers [such as visas] and bring them to the table. [Timnit Gebru, Lead Ethical Artificial Intelligence, Google & Co-founder, Black in AI]

- There will be diminished privacy, but we can still choose otherwise. One route leads down a dark road, but another leads to benefits for all. Technology can help us move from hope to reality. [Jean-Philippe Courtois, Microsoft]

- Our AI opportunity: the world runs on software. It is embedded in hospitals, industry, banks, retail and gas. The opportunities are endless. This is an amazing time to see the economy going digital. [Jean-Philippe Courtois, Microsoft]

Reports for further reading:

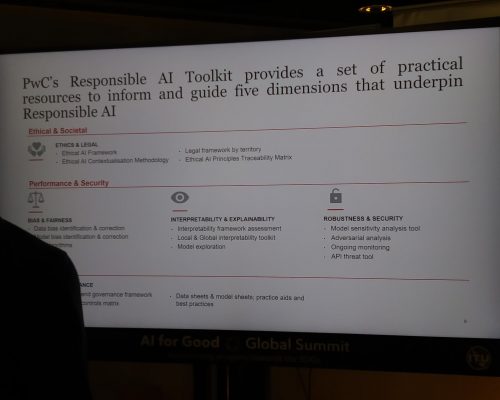

- PwC report on jobs threatened by AI.

- PwC report on ethics [out in July].

- UNESCO’s report “Steering AI for Knowledge Societies: A ROAM Perspective”

- France: AI for Humanity.

- “I’d blush if I could: Closing gender divides in digital skills through education” (UNESCO report).